Exploring AI Image Generation with ComfyUI

A Technical Deep Dive into Workflows and Flux-1D Integration

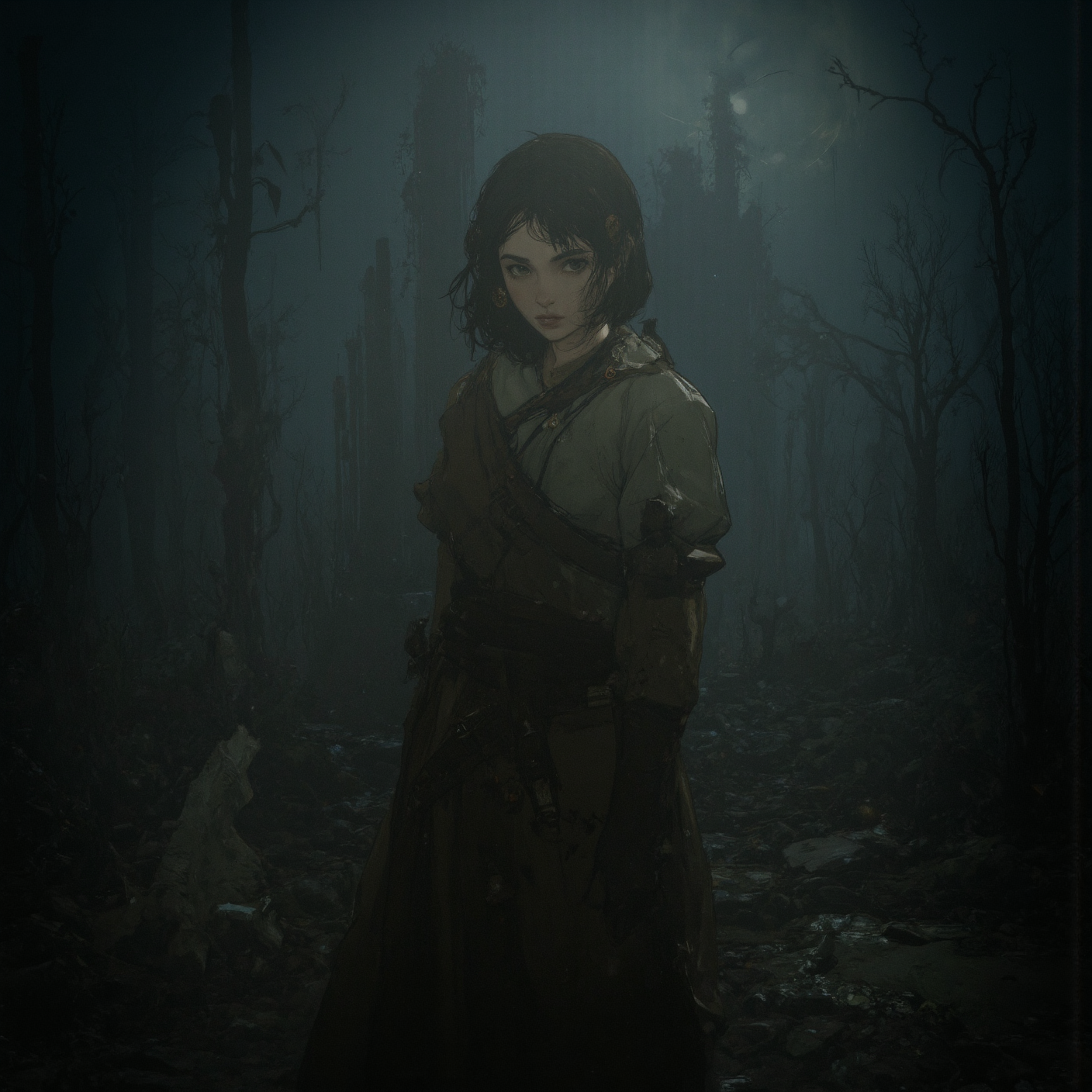

AI image generation has rapidly become a core part of many creative and technical workflows, letting users produce detailed visuals from text prompts and other conditioning with comparatively little effort. Among the available tools, ComfyUI has emerged as a powerful and flexible interface for building and managing complex AI workflows. In this blog post, we’ll take a technical deep dive into ComfyUI, its architecture, and how it can be used to integrate models like Flux-1D for image generation. The goal is not to promote a specific combination but to understand ComfyUI as a tool and how it can be adapted to work with models of your choice, such as Flux-1D.

What is ComfyUI?

ComfyUI is a modular and extensible user interface designed to simplify the process of working with AI models, particularly those involved in image generation. Unlike traditional interfaces that abstract away the underlying mechanics, ComfyUI provides a node-based workflow system that allows users to visually construct and customize their AI pipelines. This makes it an ideal tool for researchers, developers, and creators who want fine-grained control over their workflows.

At its core, ComfyUI is built to be model-agnostic. Rather than being tied to one model architecture, it provides a framework for integrating and experimenting with a wide range of models: diffusion models from several families, plus auxiliary models such as GAN-based (ESRGAN-style) upscalers. This flexibility is one of its greatest strengths, allowing users to tailor their workflows to their specific needs.
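To make the node graph concrete, here is a minimal sketch of a text-to-image workflow in the JSON "API format" that ComfyUI can export and accept over HTTP. Each key is a node id, `class_type` names a node type, and an input written as `[node_id, output_index]` links to another node’s output. The node class names are ComfyUI’s built-in Stable Diffusion-style nodes; the checkpoint file name, the prompt, the default server address `http://127.0.0.1:8188`, and the helper functions (`dangling_links`, `with_checkpoint`, `queue_prompt`) are assumptions for illustration, and node inputs can differ between ComfyUI versions. Flux-family models are often loaded through separate diffusion-model, text-encoder, and VAE loader nodes rather than a single checkpoint loader, so treat this as a pattern, not a ready-made Flux-1D graph.

```python
import copy
import json
import urllib.request

# Minimal text-to-image graph in ComfyUI's API format (a sketch; inputs may vary by version).
# A list value [source_node_id, output_index] is a link to another node's output.
WORKFLOW = {
    "1": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "model.safetensors"}},  # hypothetical file name
    "2": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "a lighthouse at dusk", "clip": ["1", 1]}},
    "3": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "", "clip": ["1", 1]}},  # empty negative prompt
    "4": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 1024, "height": 1024, "batch_size": 1}},
    "5": {"class_type": "KSampler",
          "inputs": {"model": ["1", 0], "positive": ["2", 0], "negative": ["3", 0],
                     "latent_image": ["4", 0], "seed": 42, "steps": 20, "cfg": 7.0,
                     "sampler_name": "euler", "scheduler": "normal", "denoise": 1.0}},
    "6": {"class_type": "VAEDecode",
          "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
    "7": {"class_type": "SaveImage",
          "inputs": {"images": ["6", 0], "filename_prefix": "comfy_demo"}},
}


def dangling_links(workflow):
    """Return (node_id, input_name) pairs whose link points at a node that does not exist."""
    bad = []
    for node_id, node in workflow.items():
        for name, value in node["inputs"].items():
            is_link = isinstance(value, list) and len(value) == 2 and isinstance(value[0], str)
            if is_link and value[0] not in workflow:
                bad.append((node_id, name))
    return bad


def with_checkpoint(workflow, ckpt_name):
    """Return a copy of the graph with every CheckpointLoaderSimple pointed at ckpt_name."""
    new = copy.deepcopy(workflow)
    for node in new.values():
        if node["class_type"] == "CheckpointLoaderSimple":
            node["inputs"]["ckpt_name"] = ckpt_name
    return new


def queue_prompt(workflow, server="http://127.0.0.1:8188"):
    """POST the graph to a running ComfyUI server's /prompt endpoint and return its JSON reply."""
    data = json.dumps({"prompt": workflow}).encode("utf-8")
    req = urllib.request.Request(f"{server}/prompt", data=data,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())
```

With a ComfyUI server running, `queue_prompt(with_checkpoint(WORKFLOW, "another_model.safetensors"))` would queue the same graph against a different checkpoint of the same family (the file name is hypothetical). Because the graph is plain data, swapping the model is a one-input change.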

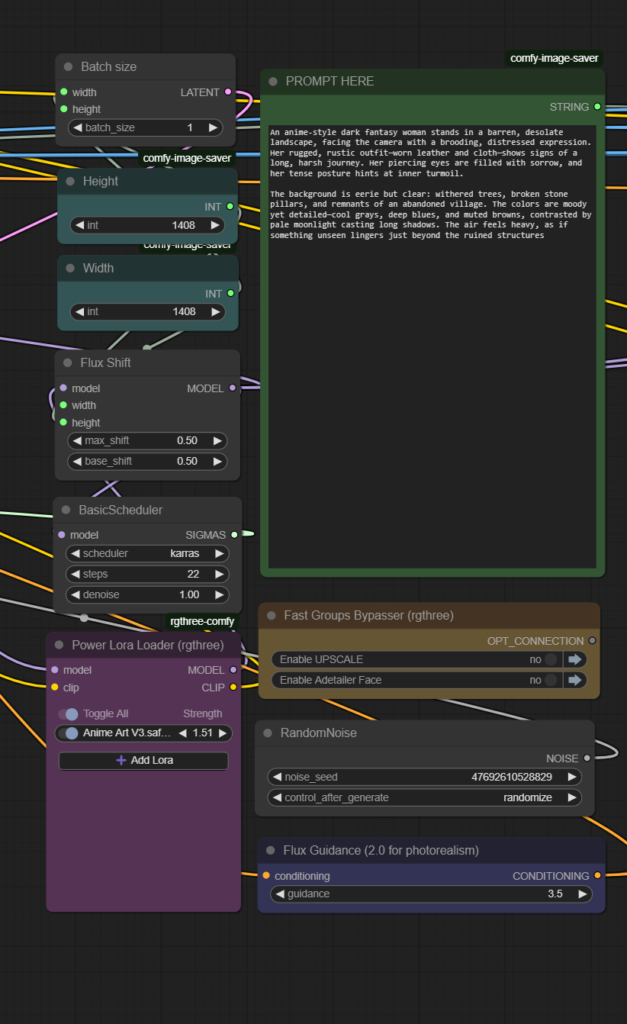

ComfyUI’s Architecture: A Node-Based Workflow System

ComfyUI’s power lies in its node-based architecture. Each node represents a specific function or operation, such as loading a model, preprocessing inputs, or generating outputs. These nodes can be connected in various configurations to create custom workflows. Here’s a breakdown of some key components:

Model Loader Node:

This node is responsible for loading the AI model into the workflow. ComfyUI ships several loader nodes, for example one for all-in-one checkpoints and separate ones for diffusion models, text encoders, and VAEs, so the same graph structure can host Flux-1D, Stable Diffusion, or other supported models.

Input Nodes:

Input nodes allow users to define parameters such as image resolution, noise settings like the random seed, or textual prompts. These parameters serve as the starting point for the generation process.

Processing Nodes:

These nodes handle the core operations, such as running the diffusion process or applying transformations. For example, when using Flux-1D, a sampler node (such as the built-in KSampler) manages the reverse diffusion steps that generate the final image.

Output Nodes:

Once the image is generated, output nodes can be used to save, display, or further process the result. Additional nodes for upscaling, color correction, or style transfer can also be added at this stage.

Custom Nodes:

ComfyUI supports the creation of custom nodes, enabling users to extend its functionality. This is particularly useful for integrating experimental models or adding unique post-processing steps.
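By ComfyUI’s convention, a custom node is a Python class that declares its inputs in an `INPUT_TYPES` classmethod, its outputs in `RETURN_TYPES`, and the method to call in `FUNCTION`; it is registered through a module-level `NODE_CLASS_MAPPINGS` dictionary in a module or package placed under ComfyUI’s `custom_nodes/` directory. The sketch below follows that convention. The node itself (`ResolutionFromMegapixels`), which turns a pixel budget and aspect ratio into a width and height rounded to a multiple of 64, is hypothetical, and both the multiple-of-64 default and treating one megapixel as 1024 x 1024 pixels are assumptions, not requirements of any particular model.

```python
import math


class ResolutionFromMegapixels:
    """Hypothetical custom node: derive width/height from a pixel budget and an aspect ratio."""

    @classmethod
    def INPUT_TYPES(cls):
        # Each input: (type name, options dict used to build the widget in the UI).
        return {
            "required": {
                "megapixels": ("FLOAT", {"default": 1.0, "min": 0.1, "max": 16.0, "step": 0.1}),
                "aspect_w": ("INT", {"default": 16, "min": 1, "max": 64}),
                "aspect_h": ("INT", {"default": 9, "min": 1, "max": 64}),
                "multiple": ("INT", {"default": 64, "min": 8, "max": 256, "step": 8}),
            }
        }

    RETURN_TYPES = ("INT", "INT")
    RETURN_NAMES = ("width", "height")
    FUNCTION = "compute"           # name of the method ComfyUI calls
    CATEGORY = "utils/resolution"  # where the node appears in the add-node menu

    def compute(self, megapixels, aspect_w, aspect_h, multiple):
        # Assumption: 1 megapixel is treated as 1024 x 1024 pixels.
        target_pixels = megapixels * 1024 * 1024
        # Solve width * height = target_pixels with width / height = aspect_w / aspect_h.
        height = math.sqrt(target_pixels * aspect_h / aspect_w)
        width = height * aspect_w / aspect_h

        def snap(value):
            # Round to the nearest multiple, never going below one multiple.
            return max(multiple, round(value / multiple) * multiple)

        # Node functions return a tuple matching RETURN_TYPES.
        return (snap(width), snap(height))


NODE_CLASS_MAPPINGS = {"ResolutionFromMegapixels": ResolutionFromMegapixels}
NODE_DISPLAY_NAME_MAPPINGS = {"ResolutionFromMegapixels": "Resolution From Megapixels"}
```

Saved as, say, `custom_nodes/resolution_node/__init__.py`, the node should show up after restarting ComfyUI, and its two INT outputs could then feed the width and height of a latent-image node.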

Integrating Flux-1D with ComfyUI

While ComfyUI is model-agnostic, I’ve chosen to use Flux-1D in my workflows due to its efficiency and unique approach to image generation. Flux-1D is a diffusion model that leverages one-dimensional latent representations to streamline the generation process. Here’s how it fits into a ComfyUI workflow:

Loading the Model:

The first step is to load the Flux-1D model using ComfyUI’s model loader node. This node reads the model weights and prepares the model for inference.

Configuring Inputs:

Next, input nodes are used to define the parameters for the generation process. This includes setting the seed that determines the initial noise pattern, specifying the desired image dimensions, and providing any textual prompts or conditioning data.

Running the Diffusion Process:

The processing node takes over, executing the reverse diffusion steps defined by Flux-1D and iteratively refining the noise into a coherent image. ComfyUI reports progress as the steps run and can display previews of intermediate results, and because the graph is modular, users can adjust any parameter and re-run the workflow.

Post-Processing:

Once the image is generated, additional nodes can be used to enhance the output. For example, an upscaling node can increase the resolution, while a color correction node can adjust the tonal balance.

Exporting the Result:

Finally, the output node saves the generated image in the desired format. ComfyUI also supports batch processing, making it easy to generate multiple images in a single run.
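The "Running the Diffusion Process" step above, iteratively refining noise into an image, can be illustrated with a toy Euler sampler in the style of the k-diffusion library, whose sampler code ComfyUI adapts. This is a sketch on a plain list of numbers, not Flux-1D’s actual sampler: `oracle_denoise` is a hypothetical stand-in for the neural network that always predicts the same clean latent, and the noise schedule in `sigmas` is an arbitrary assumption.

```python
import random


def euler_sample(x, sigmas, denoise):
    """Move noisy latent x down the noise levels in sigmas (last entry 0) with Euler steps."""
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        denoised = denoise(x, sigma)  # the model's estimate of the clean sample
        # Derivative of the sampling ODE: d = (x - denoised) / sigma
        d = [(xi - di) / sigma for xi, di in zip(x, denoised)]
        # Euler step from sigma to sigma_next
        x = [xi + di * (sigma_next - sigma) for xi, di in zip(x, d)]
    return x


# Hypothetical oracle "model": always predicts the same clean latent.
TARGET = [0.2, -0.5, 0.9]


def oracle_denoise(x, sigma):
    return TARGET


rng = random.Random(0)
sigmas = [14.6, 7.0, 3.0, 1.0, 0.3, 0.0]  # assumed schedule, decreasing to zero
# Start from pure noise scaled to the largest noise level.
noise = [rng.gauss(0.0, 1.0) * sigmas[0] for _ in TARGET]
result = euler_sample(noise, sigmas, oracle_denoise)
print([round(v, 6) for v in result])  # prints [0.2, -0.5, 0.9]
```

With a perfect denoiser, each step shrinks the remaining noise by the factor `sigma_next / sigma`, so the final step to zero lands on the prediction; a real model’s estimate changes at every step, which is why more steps generally refine the result further.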

Why ComfyUI Stands Out

ComfyUI’s strength lies in its flexibility and transparency. Unlike black-box tools that hide the underlying mechanics, ComfyUI provides full visibility into the workflow, allowing users to understand and optimize every step. Here are some key features that make it stand out:

Modularity: The node-based system allows users to build and modify workflows with ease.

Extensibility: Custom nodes and plugins enable users to add new functionality or integrate experimental models.

Efficiency: ComfyUI only re-executes the parts of a workflow that changed between runs, so iterating on a complex graph does not mean recomputing every node.

Community Support: An active community contributes plugins, tutorials, and troubleshooting resources, making it easier to get started and solve problems.
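One concrete mechanism behind the efficiency point, as ComfyUI’s README describes it, is that only the parts of a workflow that change between runs are re-executed. The toy executor below illustrates the idea: nodes run in dependency (topological) order, and a node whose type and resolved inputs were seen before reuses its cached result. It is a simplified model written for this post, not ComfyUI’s execution engine; the node types and the `("link", node_id)` input format are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical node types: plain functions standing in for real ComfyUI nodes.
NODE_TYPES = {
    "Constant": lambda value: value,
    "Add": lambda a, b: a + b,
    "Scale": lambda x, factor: x * factor,
}


def is_link(value):
    """In this toy format, ("link", node_id) means 'use that node's output'."""
    return isinstance(value, tuple) and len(value) == 2 and value[0] == "link"


class CachingExecutor:
    """Toy executor: runs nodes in dependency order, reusing results whose inputs are unchanged."""

    def __init__(self):
        self.cache = {}     # (node type, resolved inputs) -> result; inputs must be hashable
        self.executed = []  # ids of nodes actually computed during the last run

    def run(self, graph):
        deps = {nid: {v[1] for v in node["inputs"].values() if is_link(v)}
                for nid, node in graph.items()}
        results, self.executed = {}, []
        for nid in TopologicalSorter(deps).static_order():  # raises CycleError on cycles
            node = graph[nid]
            kwargs = {k: results[v[1]] if is_link(v) else v for k, v in node["inputs"].items()}
            key = (node["type"], tuple(sorted(kwargs.items())))
            if key not in self.cache:
                self.cache[key] = NODE_TYPES[node["type"]](**kwargs)
                self.executed.append(nid)
            results[nid] = self.cache[key]
        return results


graph = {
    "a": {"type": "Constant", "inputs": {"value": 2}},
    "b": {"type": "Constant", "inputs": {"value": 3}},
    "sum": {"type": "Add", "inputs": {"a": ("link", "a"), "b": ("link", "b")}},
    "out": {"type": "Scale", "inputs": {"x": ("link", "sum"), "factor": 10}},
}
ex = CachingExecutor()
print(ex.run(graph)["out"])  # prints 50; every node runs the first time
graph["out"]["inputs"]["factor"] = 100  # change one widget value on the last node
print(ex.run(graph)["out"], ex.executed)  # prints 500 ['out']
```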

Technical Considerations for Using Flux-1D in ComfyUI

While Flux-1D is a powerful model, integrating it into ComfyUI requires attention to a few technical details:

Model Compatibility:

Ensure that the Flux-1D model is compatible with ComfyUI’s architecture. This may involve converting the model weights to a supported format or writing a custom node to handle specific operations.

Resource Management:

Diffusion models can be resource-intensive. ComfyUI lets users control memory and compute use by adjusting batch sizes, resolution, and other parameters, and it offers launch options such as --lowvram for GPUs with limited memory.

Latent Space Manipulation:

Flux-1D’s one-dimensional latent representations offer unique opportunities for experimentation. ComfyUI’s modular design makes it easy to explore these possibilities, such as interpolating between latent vectors or applying transformations to them.

Debugging and Optimization:

ComfyUI’s transparent workflow system simplifies debugging and optimization. Users can attach preview or save nodes at intermediate points in the graph to inspect outputs and track down bottlenecks or errors.
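For the model-compatibility point, one cheap check is to look inside a weights file before loading it. Many model files used with ComfyUI are in the safetensors format, which starts with an 8-byte little-endian header length followed by a JSON header listing each tensor’s name, dtype, shape, and byte offsets, so names and precisions can be inspected with the standard library alone. The sketch below reads only that header; the helper names and the tiny hand-built demo file are illustrative, and what a given loader expects (key prefixes, dtypes) depends on the model and the ComfyUI version.

```python
import json
import os
import struct
import tempfile
from collections import Counter


def read_safetensors_header(path):
    """Return the JSON header of a .safetensors file without reading any tensor data."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))  # little-endian uint64 length prefix
        return json.loads(f.read(header_len).decode("utf-8"))


def dtype_summary(header):
    """Count tensors per dtype, skipping the optional __metadata__ entry."""
    return Counter(info["dtype"] for name, info in header.items() if name != "__metadata__")


# Demo: write a tiny file by hand holding one 2x2 float16 tensor (8 bytes of zeros).
demo_header = {
    "__metadata__": {"format": "pt"},
    "layer.weight": {"dtype": "F16", "shape": [2, 2], "data_offsets": [0, 8]},
}
blob = json.dumps(demo_header).encode("utf-8")
with tempfile.NamedTemporaryFile(suffix=".safetensors", delete=False) as tmp:
    tmp.write(struct.pack("<Q", len(blob)) + blob + bytes(8))
    demo_path = tmp.name

header = read_safetensors_header(demo_path)
os.remove(demo_path)
print(dtype_summary(header))  # prints Counter({'F16': 1})
```

Pointed at a real model file, `dtype_summary` shows whether the weights are stored as, for example, F16 or BF16, and the tensor names reveal which key layout the file uses.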
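As an example of the latent-space experimentation mentioned above, interpolation between two latents is often done with spherical linear interpolation (slerp) rather than a straight linear blend: high-dimensional Gaussian noise vectors concentrate near a sphere, and a linear midpoint between two of them has a noticeably smaller norm. The sketch below treats each latent as a flat list of floats (any latent tensor can be flattened this way); it is a generic illustration, not a built-in ComfyUI node, and the function name is our own.

```python
import math


def slerp(a, b, t, eps=1e-7):
    """Spherical interpolation between flat vectors a and b, with t in [0, 1]."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    cos_omega = max(-1.0, min(1.0, cos_omega))  # guard against rounding outside [-1, 1]
    omega = math.acos(cos_omega)  # angle between the two vectors
    if math.sin(omega) < eps:
        # (Anti)parallel vectors make slerp unstable, so fall back to linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    s_a = math.sin((1 - t) * omega) / math.sin(omega)
    s_b = math.sin(t * omega) / math.sin(omega)
    return [s_a * x + s_b * y for x, y in zip(a, b)]


a = [1.0, 0.0]
b = [0.0, 1.0]
mid = slerp(a, b, 0.5)
# mid is about [0.7071, 0.7071] and keeps unit length; a linear blend would give [0.5, 0.5].
```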

Conclusion

ComfyUI is a versatile and powerful tool for AI image generation, offering a high degree of flexibility and control over workflows. Its node-based architecture makes it suitable for a wide range of applications, from research and development to creative projects. While I’ve chosen to use Flux-1D for its efficiency and unique approach, ComfyUI’s model-agnostic design means it can be adapted to many other models and workflows.

Whether you’re a researcher exploring new AI techniques or a creator pushing the boundaries of digital art, ComfyUI provides the tools you need to bring your ideas to life. Its modularity, extensibility, and transparency make it a standout choice in the world of AI-driven image generation.
